A requirement I often hear is something like this: “We have a mailbox that receives emails of some kind that need to be processed somehow”. What options are there to fulfill the requirement? As always, it depends. When the items need to be processed as soon as possible and the client processing the items can be reached directly by the Exchange server, push notifications are certainly a good choice. If, on the other hand, item processing is not time-critical, polling is a suitable method of processing those items. It’s much simpler to use than push notifications. A simple example might look like this:

    private static void ProcessItems(ExchangeService exchangeService)
    {
        var offset = 0;
        const int pageSize = 100;

        FindItemsResults<Item> result;
        do
        {
            // Request one page of items, starting at the current offset.
            var view = new ItemView(pageSize, offset);

            result = exchangeService.FindItems(WellKnownFolderName.Inbox, view);

            foreach (var item in result)
            {
                ProcessItem(item);
            }
            offset += pageSize;
        } while (result.MoreAvailable);
    }

This is a very naïve implementation as it always returns every item from the inbox folder of the mailbox. But at least it uses paging. This breaks the processing down from one very large request into many small requests (in this case 100 items are returned per request).

This method is suitable if you delete the processed items from the mailbox after they are processed. Of course, the items should not be removed until every item has been processed. Otherwise items may be skipped, since the offsets of the individual items change when an item is removed from the folder.

If the items are not removed from the store, the calling application must distinguish new items from items already processed. An obvious way to do this is to mark each item as read once it has been processed. This changes the requirement for the ProcessItems method: It should only process unread items. This modification has been incorporated into the following example:

    private static void ProcessItems(ExchangeService exchangeService)
    {
        var offset = 0;
        const int pageSize = 100;

        // Only return items that have not been marked as read.
        var unreadFilter = new SearchFilter.IsEqualTo(EmailMessageSchema.IsRead, false);

        FindItemsResults<Item> result;
        do
        {
            var view = new ItemView(pageSize, offset);

            // The released Managed API takes the filter as an argument of FindItems.
            result = exchangeService.FindItems(WellKnownFolderName.Inbox, unreadFilter, view);

            foreach (var item in result)
            {
                ProcessItem(item);
            }
            offset += pageSize;
        } while (result.MoreAvailable);
    }

The only change in this example is the search filter, which restricts the result to unread items. What’s missing here is the modification of the read status of each message, and that is a considerable drawback of the whole solution. To touch each processed item, the UpdateItems method must be called. It is sufficient to call the UpdateItems method once per page and pass all item ids from the last result set to it. But this puts a significant additional load on the Exchange server and slows overall processing down. Furthermore, if someone accesses the mailbox (either with Outlook or Outlook Web Access) and accidentally marks one or more items as read, those items will not be processed by the client application.

Next idea: Find all items that were received after the last time the mailbox was checked. A ProcessItems method that implements this behavior might look like this:

    private static void ProcessItems(ExchangeService exchangeService, DateTime lastCheck)
    {
        var offset = 0;
        const int pageSize = 100;

        // Only return items received at or after the last check.
        var receivedFilter = new SearchFilter.IsGreaterThanOrEqualTo(ItemSchema.DateTimeReceived, lastCheck);

        FindItemsResults<Item> result;
        do
        {
            var view = new ItemView(pageSize, offset);

            // The released Managed API takes the filter as an argument of FindItems.
            result = exchangeService.FindItems(WellKnownFolderName.Inbox, receivedFilter, view);

            foreach (var item in result)
            {
                ProcessItem(item);
            }
            offset += pageSize;
        } while (result.MoreAvailable);
    }

As with the second example, the only difference from the first one is the search filter, which restricts the FindItems call to those items received at or after a specific time. This method removes the requirement to mark each processed item on the server. But it adds another caveat: A mail that is received during a ProcessItems call will be missed if no additional checks are performed. This can be a little tricky.
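One possible form of such an additional check, sketched here under my own assumptions, is to query with a small overlap before the last check time and to skip items that were already handled. The PollWindow class and its member names are hypothetical and not part of the Managed API; the caller would pass QueryFrom(lastCheck) to the DateTimeReceived filter and ask ShouldProcess for each returned item id.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of the EWS Managed API.
public sealed class PollWindow
{
    private readonly TimeSpan overlap;
    private readonly Dictionary<string, DateTime> seen = new Dictionary<string, DateTime>();

    public PollWindow(TimeSpan overlap)
    {
        this.overlap = overlap;
    }

    // The time to use in the DateTimeReceived filter: slightly before the last check.
    public DateTime QueryFrom(DateTime lastCheck)
    {
        return lastCheck - overlap;
    }

    // Returns true the first time an item id is seen and false for repeats.
    public bool ShouldProcess(string itemId, DateTime received)
    {
        if (seen.ContainsKey(itemId))
        {
            return false;
        }
        seen[itemId] = received;
        return true;
    }

    // Forgets ids that are older than the query window of the given check time.
    public void Prune(DateTime lastCheck)
    {
        var cutoff = lastCheck - overlap;
        var stale = new List<string>();
        foreach (var entry in seen)
        {
            if (entry.Value < cutoff)
            {
                stale.Add(entry.Key);
            }
        }
        foreach (var id in stale)
        {
            seen.Remove(id);
        }
    }
}
```

The overlap trades a few duplicate results per poll for not missing mail that arrives while a poll is running. This sketch keeps the seen ids in memory only, so it assumes the polling process stays alive between checks.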

Luckily, the Exchange Web Services offer a more suitable solution for the whole requirement: the SyncFolderItems method. This method not only solves the problems mentioned above but also returns deleted items, if such processing is necessary. A method that uses this API looks like this:

    private static string SyncItems(ExchangeService exchangeService, string syncState)
    {
        const int pageSize = 100;

        ChangeCollection<ItemChange> changeCollection;
        do
        {
            // Ask for the next batch of changes since the given sync state.
            changeCollection = exchangeService.SyncFolderItems(new FolderId(WellKnownFolderName.Inbox),
                new PropertySet(BasePropertySet.FirstClassProperties), null, pageSize,
                SyncFolderItemsScope.NormalItems, syncState);

            foreach (var change in changeCollection)
            {
                // Only newly created items are of interest here.
                if (change.ChangeType == ChangeType.Create)
                {
                    ProcessItem(change.Item);
                }
            }
            syncState = changeCollection.SyncState;

        } while (changeCollection.MoreChangesAvailable);
        return syncState;
    }

This method only processes newly created items and ignores all other item changes or deletions. The application calling this method needs to store the synchronization state between calls. If an empty syncState is provided, Exchange will return every item in the folder as a “Created” item. This makes it possible to process all existing items once and then, with the same logic, every changed item. The only drawback of this method is that the synchronization state can become quite big (for a folder with ~4500 items, the sync state is approximately 60 KB).
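Since the calling application has to carry the synchronization state from one run to the next, it must be persisted somewhere. The following is a minimal sketch that keeps it in a text file. The SyncStateStore class and the file name in the usage comment are hypothetical and not part of the Managed API.

```csharp
using System.IO;

// Hypothetical helper: persists the opaque sync state string between polling runs.
public static class SyncStateStore
{
    // Returns the stored sync state, or null if nothing has been saved yet.
    // A null state causes a full synchronization on the next call.
    public static string Load(string path)
    {
        if (!File.Exists(path))
        {
            return null;
        }
        var state = File.ReadAllText(path);
        return string.IsNullOrEmpty(state) ? null : state;
    }

    // Overwrites the stored sync state with the one returned by the last run.
    public static void Save(string path, string syncState)
    {
        File.WriteAllText(path, syncState ?? string.Empty);
    }
}

// Possible usage together with the SyncItems method shown above:
//   var state = SyncStateStore.Load("inbox.syncstate");
//   state = SyncItems(exchangeService, state);
//   SyncStateStore.Save("inbox.syncstate", state);
```

Saving only after SyncItems has returned means that a crash in the middle of a run leads to items being processed again rather than being skipped. Whether that is acceptable depends on what ProcessItem does.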


Tags:

exchange 2007, exchange web services, finditems, syncfolderitems, push notifications, managed api

Under certain circumstances a program needs to determine the name of the computer it’s running on. The first approach to get this name is to use the System.Environment.MachineName property. However, this name only reflects the NetBIOS name of the current machine. In larger environments, a fully qualified name including the DNS domain the computer belongs to is often required. This can be something like computername.contoso.local. One example where this fully qualified name might be needed is Exchange push notifications. I’ve published a component to CodePlex that makes it really easy to incorporate them in an application. However, for the notifications to reach the client, the component needs to tell the Exchange server a correct callback address. In a very simple network environment, it is sufficient to specify the NetBIOS hostname. But in more complex environments, Exchange might not be able to send a notification because it cannot correctly resolve the unqualified hostname to an IP address.

The fully qualified domain name of the current host can be determined with a call to the System.Net.NetworkInformation.IPGlobalProperties.GetIPGlobalProperties method. This method returns, among other things, the required information:

    // Requires: using System.Net.NetworkInformation;
    var ipGlobalProperties = IPGlobalProperties.GetIPGlobalProperties();
    string fullQualifiedDomainName;

    if (!string.IsNullOrEmpty(ipGlobalProperties.DomainName))
    {
        // A DNS domain is configured: combine host name and domain name.
        fullQualifiedDomainName = string.Format("{0}.{1}", ipGlobalProperties.HostName, ipGlobalProperties.DomainName);
    }
    else
    {
        // No DNS domain is configured: fall back to the plain host name.
        fullQualifiedDomainName = ipGlobalProperties.HostName;
    }

I have updated the PushNotification component to reflect this new behavior.
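To illustrate where the fully qualified name ends up, here is a small sketch that wraps the lookup in a method and builds a callback address from it. The CallbackAddress class, the port and the path are assumptions made for this example. They are not taken from the PushNotification component.

```csharp
using System;
using System.Net.NetworkInformation;

// Hypothetical helper for building the address Exchange should call back.
public static class CallbackAddress
{
    // Same logic as in the snippet above, wrapped in a reusable method.
    public static string GetFullyQualifiedDomainName()
    {
        var properties = IPGlobalProperties.GetIPGlobalProperties();
        return string.IsNullOrEmpty(properties.DomainName)
            ? properties.HostName
            : string.Format("{0}.{1}", properties.HostName, properties.DomainName);
    }

    // Builds an http callback address from a host name, a port and a path.
    public static Uri Build(string hostName, int port, string path)
    {
        return new UriBuilder(Uri.UriSchemeHttp, hostName, port, path).Uri;
    }
}

// Possible usage (port and path are made up for this example):
//   var callback = CallbackAddress.Build(
//       CallbackAddress.GetFullyQualifiedDomainName(), 8080, "/notifications");
```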


Tags:

dns, full qualified domain name, ipglobalproperties, exchange, push notifications, exchange 2007, exchange web services

Microsoft just released a post about the technologies being removed from the next version of Exchange. I'm OK with WebDAV being removed, given that EWS will be extended to support access to hidden messages and to provide strongly typed access to mailbox settings. I can also live with the fact that CdoEx and ExOleDB will be removed. But store events are another thing.

Sure, they are not really easy to implement, and the whole access using ADO is a terrible mess. CdoEx doesn't exactly make things better, as there is no support for tasks.

The proposed replacements for store event sinks are transport agents and EWS notifications. While transport agents are fine when dealing with email messages, they are useless with respect to appointments, contacts and tasks. This leaves EWS notifications as the sole option here. Why is this bad?

- Synchronous execution (access to old data as well): Synchronous event sinks (OnSyncSave, OnSyncDelete) provide direct access to the item being modified (the record is directly available). And during the Begin phase, the event sink can even open the old record and execute actions based on how fields were changed. This feature will be lost completely with EWS notifications.

- Register once, even for new users (store-wide event sinks): Store-wide event sinks are registered once on a store and are triggered for every mailbox in the store, even for new users. EWS notifications must be registered for each mailbox, and the application receiving the notifications is required to monitor Exchange for new mailboxes.

- Access to all properties, even during deletion of an object: With a synchronous OnSyncDelete event sink, all properties of an item can be examined before it gets deleted. With notifications I merely get a notification that an item with a specific ItemId has been deleted. The client application is responsible for tracking down the deleted item, whether it was soft-deleted or moved to the recycle bin. The properties can then be accessed from there. But if the item was hard-deleted (in case of the dumpster being disabled on public folders, for example), one is out of luck. The real problem is this: The ItemId is based on the email address of the mailbox owner as well as the MAPI EntryId of the item (see Exchange 2007 SP1 Item ids). Neither the mailbox address nor the MAPI entry id is guaranteed to be stable (since Exchange 2003 SP2, Exchange recreates meeting items under certain circumstances: CDOEX Calendaring Differences Between Exchange 2003 and Exchange 2003 SP2). As a result, the ItemId is not suitable as a long-term identifier to be stored in a database. In scenarios where Exchange data is being replicated to a relational database, this can become a problem.

- Order of execution: Synchronous event sinks are called in the order the items were changed. With asynchronous notifications, this cannot be guaranteed.

To clarify this: The order in which notifications are sent to the client application cannot guaranteed to reflect the order in which the changes were made. To work around this issue, the each notification carries a watermark and a previous watermark. This way a client application can restore the correct order. But with synchronous store events, this comes for free. - Silent updates: Due to the possibility to modify an item while it's being changed, the synchronous store events allow some sort of silent update. This works like this:

- Along with all the other modifications, a client program sets a special user defined field to an arbitrary value.

- The event sink checks this field during the Begin phase, and if it's set it won't process the item. Instead it just removes the property from the element.

- Modify changes made by a user / Block modifications: A synchronous event sink can modify changes while they are being saved. It can even abort the change, thus preventing a user from deleting an item for example.

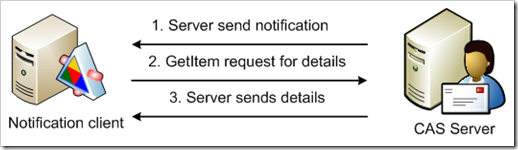

Note: Canceling updates/deletions can break synchronization with ActiveSync or Outlook. So don't do this! - Performance: In most scenarios, the WebService receiving the push notifications will not reside on the Exchange server (I know a bunch of administrators who even hesitate to install the .NET Framework on the Exchange server let alone software which does not come from MS*). In this case, the push notifications work like this:

This makes three network hops to get the properties of a changed item. What makes things worse is the fact that notifications are sent for every modified element. With store event sinks, one could specify a filter so that the sink was only triggered for a subset of elements.
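Regarding the order of execution mentioned above: restoring the order from the watermarks could be sketched roughly as follows. This is a simplified model under my own assumptions. The Notification class is a hypothetical stand-in with just the two watermark values (assumed to be non-null), not the EWS type, and each notification is assumed to name the watermark of its direct predecessor.

```csharp
using System.Collections.Generic;

// Hypothetical stand-in for a received notification; only the watermarks matter here.
public sealed class Notification
{
    public string PreviousWatermark;
    public string Watermark;
}

public static class NotificationOrder
{
    // Returns the notifications that follow lastWatermark, in chain order.
    // Notifications that do not connect to the chain are left out.
    public static List<Notification> Restore(string lastWatermark, IEnumerable<Notification> received)
    {
        // Index each notification by the watermark it claims to follow.
        var byPrevious = new Dictionary<string, Notification>();
        foreach (var notification in received)
        {
            byPrevious[notification.PreviousWatermark] = notification;
        }

        var ordered = new List<Notification>();
        var current = lastWatermark;
        Notification next;
        while (byPrevious.TryGetValue(current, out next))
        {
            ordered.Add(next);
            byPrevious.Remove(current); // also guards against cycles
            current = next.Watermark;
        }
        return ordered;
    }
}
```

A client would keep the watermark of the last notification it handled and call Restore with whatever has arrived since. Anything that does not connect to the chain yet would have to wait for the missing link.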

So, while store event sinks are certainly not an unalloyed pleasure to use, they are far better suited to certain scenarios. I would rather see them replaced by a managed solution (like transport agents) than by what is coming now.

By the way, the OnTimer, OnMdbShutdown, OnMdbStartup event sinks are being removed without any replacement.

Enough ranting for one day...

* Now, one could argue that a store event sink has far more impact on the Exchange server and those administrators would also hesitate to install them... while that is technically true, there is no other option yet, so they have to swallow that pill.
